Paper Overview

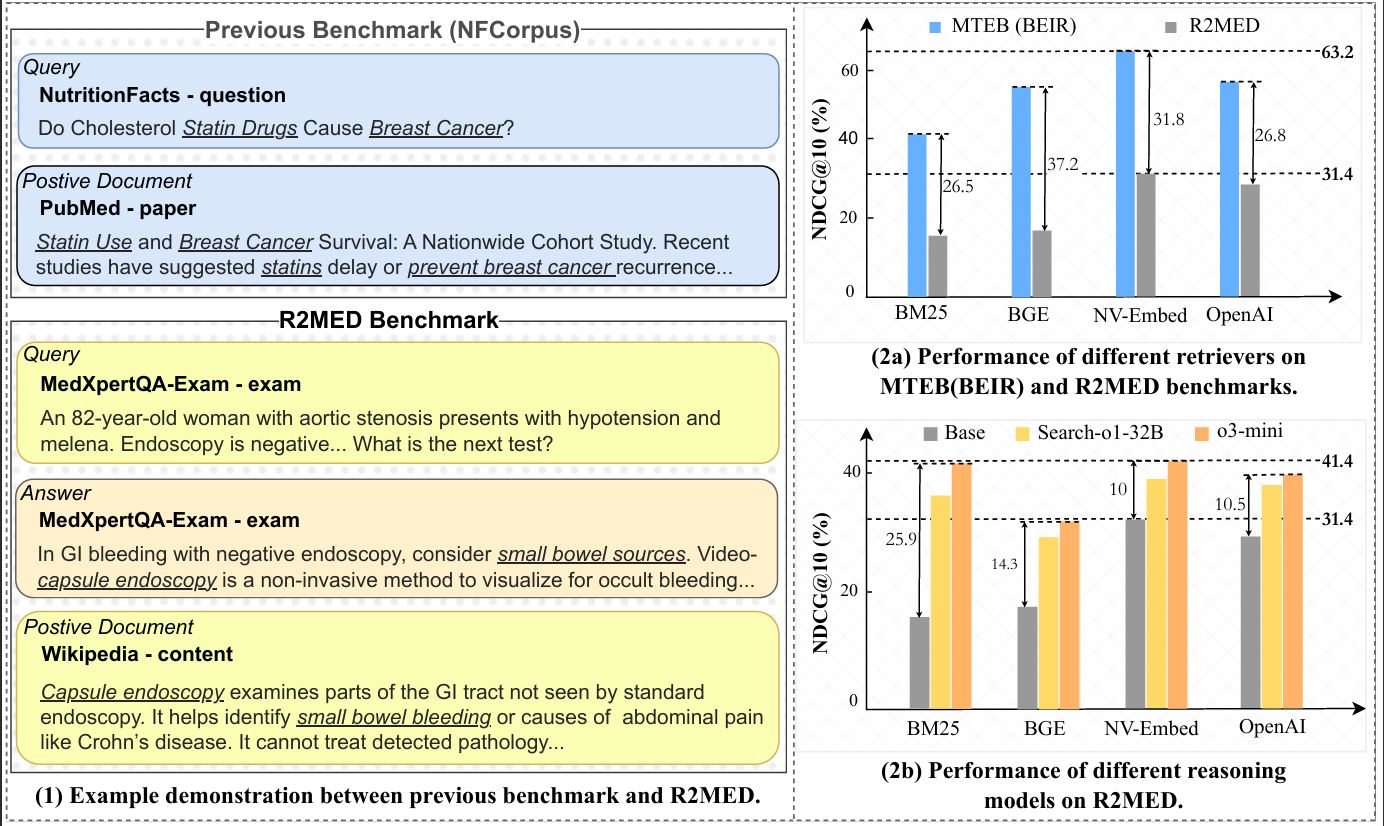

We introduce R2MED, the first benchmark explicitly designed for reasoning-driven medical retrieval. It comprises 876 queries spanning three tasks: Q&A reference retrieval, clinical evidence retrieval, and clinical case retrieval.

We evaluate 15 widely used retrieval systems on R2MED and find that even the best model achieves only 31.4 nDCG@10, demonstrating the benchmark’s difficulty. Classical re-ranking and generation-augmented retrieval methods offer only modest improvements. Although large reasoning models improve performance by generating intermediate inferences before retrieval, the best results still peak at 41.4 nDCG@10.

These findings underscore a substantial gap between current retrieval techniques and the reasoning demands of real clinical tasks. We release R2MED as a challenging benchmark to foster the development of next-generation medical retrieval systems with enhanced reasoning capabilities.

We report the average nDCG@10 score across the 8 datasets in R2MED. "LLM+Retrievers" means that an LLM generates an answer before retrieval (HyDE); for details, see https://github.com/R2MED/R2MED/tree/main/src.
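As a rough illustration of the metric reported here, the following is a minimal sketch of nDCG@10 for a single query. It assumes linear gains and a log2 rank discount (the convention used by common evaluation tools); the function names and the toy `qrels` dictionary are hypothetical and are not taken from the R2MED codebase. The leaderboard reports this value multiplied by 100.

```python
import math

def dcg_at_k(relevances, k=10):
    """Discounted cumulative gain over the first k relevance grades."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))

def ndcg_at_k(ranked_doc_ids, qrels, k=10):
    """nDCG@k for one query.

    ranked_doc_ids: document ids in the order returned by the retriever.
    qrels: dict mapping document id -> relevance grade (missing ids count as 0).
    """
    gains = [qrels.get(doc_id, 0) for doc_id in ranked_doc_ids]
    ideal_gains = sorted(qrels.values(), reverse=True)
    ideal_dcg = dcg_at_k(ideal_gains, k)
    return dcg_at_k(gains, k) / ideal_dcg if ideal_dcg > 0 else 0.0

# Toy example (hypothetical ids): two relevant documents, retrieved at ranks 2 and 3.
qrels = {"d1": 1, "d3": 1}
ranking = ["d2", "d1", "d3", "d4"]
print(f"nDCG@10 = {100 * ndcg_at_k(ranking, qrels):.2f}")
```

A dataset-level score would then be the mean of this value over all queries in that dataset.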

🌟 Leaderboard submission 🌟

If you would like to submit your results to the leaderboard, email them to 2021000171@ruc.edu.cn.

You are encouraged to include a link to your open-source codebase. Otherwise, please provide a short description of the models and approaches used (e.g., the size of the retrieval model, whether LLMs such as GPT-4 are used).

| Rank | Rewriter | Retriever | Reranker | Avg. | Biology | Bio-infor. | Medical-Sci. | MedXpert-QA | MedQA-Diag | PMC-Treat. | PMC-Clin. | IIYi-Clin. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | - | BGE-Reasoner-Embed-0928 | - | 43.18 | 54.01 | 66.33 | 67.64 | 20.93 | 27.96 | 51.38 | 33.76 | 23.43 |
| 2 | - | E5-mistral-7b-instruct | ReasonRank(32B) | 42.85 | 45.56 | 67.73 | 63.45 | 18.90 | 30.60 | 41.08 | 46.11 | 29.35 |
| 3 | o3-mini | NV-Embed-v2 | - | 41.35 | 34.01 | 55.90 | 51.28 | 28.99 | 40.30 | 48.97 | 50.86 | 20.47 |
| 4 | o3-mini | BM25 | - | 41.01 | 59.65 | 46.56 | 47.17 | 34.64 | 55.22 | 41.65 | 35.32 | 7.86 |
| 5 | HuatuoGPT-o1-70B | NV-Embed-v2 | - | 39.56 | 31.25 | 52.81 | 49.55 | 25.25 | 38.33 | 48.93 | 48.57 | 21.77 |
| 6 | GPT4o | NV-Embed-v2 | - | 39.37 | 33.61 | 54.15 | 50.83 | 23.08 | 36.09 | 47.35 | 48.51 | 21.30 |
| 7 | o3-mini | Text-embedding-3-large | - | 39.09 | 31.5 | 48.61 | 48.19 | 31.74 | 39.51 | 51.69 | 38.92 | 22.56 |
| 8 | DeepSeek-R1-Distill-Llama-70B | NV-Embed-v2 | - | 38.52 | 32.83 | 53.31 | 50.32 | 22.98 | 33.78 | 47.04 | 46.53 | 21.35 |
| 9 | HuatuoGPT-o1-70B | Text-embedding-3-large | - | 38.24 | 29.77 | 47.7 | 48.4 | 27.46 | 36.79 | 52.93 | 39.26 | 23.63 |
| 10 | Search-O1 (QwQ-32b) | NV-Embed-v2 | - | 38.22 | 31.82 | 53.33 | 51.32 | 21.68 | 32.8 | 45.93 | 47.37 | 21.52 |
| 11 | Search-O1 (Qwen3-32b) | NV-Embed-v2 | - | 38.00 | 33.46 | 51.04 | 50.20 | 22.91 | 32.02 | 46.88 | 46.18 | 21.32 |
| 12 | Search-O1 (Qwen3-32b) | Text-embedding-3-large | - | 37.87 | 34.73 | 45.38 | 47.18 | 26.95 | 33.71 | 50.78 | 40.02 | 24.17 |
| 13 | GPT4o | Text-embedding-3-large | - | 37.79 | 32.15 | 45.99 | 47.97 | 27.28 | 36.92 | 51.24 | 38.96 | 21.82 |
| 14 | GPT4o | BM25 | - | 37.70 | 57.34 | 43.02 | 41.22 | 26.55 | 49.6 | 40.61 | 32.8 | 10.42 |
| 15 | DeepSeek-R1-Distill-Llama-70B | Text-embedding-3-large | - | 37.36 | 30.08 | 47.44 | 48.98 | 24.94 | 33.24 | 50.49 | 41.39 | 22.32 |
| 16 | Search-O1 (QwQ-32b) | Text-embedding-3-large | - | 37.30 | 32.43 | 46.95 | 47.84 | 25.19 | 31.22 | 51.67 | 36.58 | 26.51 |
| 17 | Llama3.1-70B-Ins | NV-Embed-v2 | - | 36.82 | 31.21 | 52.27 | 51.19 | 17.48 | 27.53 | 46.96 | 46.90 | 21.05 |
| 18 | QwQ-32B | NV-Embed-v2 | - | 36.82 | 32.26 | 52.43 | 49.91 | 21.08 | 31.29 | 46.14 | 41.06 | 20.38 |
| 19 | QwQ-32B | Text-embedding-3-large | - | 36.51 | 32.51 | 45.46 | 46.76 | 24.81 | 31.76 | 52.78 | 35.1 | 22.89 |
| 20 | DeepSeek-R1-Distill-Qwen-32B | NV-Embed-v2 | - | 36.08 | 33.10 | 51.82 | 49.39 | 18.78 | 27.38 | 45.94 | 42.16 | 20.05 |
| 21 | Llama3.1-70B-Ins | Text-embedding-3-large | - | 35.76 | 31.31 | 46.83 | 48.14 | 21.42 | 28.32 | 51.22 | 37.11 | 21.7 |
| 22 | Search-O1 (QwQ-32b) | BM25 | - | 35.57 | 60.78 | 41.58 | 43.93 | 24.07 | 39.94 | 37.52 | 28.38 | 8.34 |
| 23 | Qwen2.5-32B-Ins | NV-Embed-v2 | - | 35.34 | 31.34 | 52.35 | 49.76 | 16.40 | 22.77 | 45.31 | 43.40 | 21.35 |
| 24 | Search-R1 (Qwen2.5-7b-it-em-ppo) | NV-Embed-v2 | - | 35.11 | 30.84 | 50.66 | 49.07 | 15.05 | 20.46 | 47.36 | 45.49 | 21.95 |
| 25 | QwQ-32B | BM25 | - | 35.03 | 58.24 | 42.35 | 42.2 | 23.7 | 38.12 | 41.65 | 26.66 | 7.34 |
| 26 | DeepSeek-R1-Distill-Qwen-32B | Text-embedding-3-large | - | 34.68 | 30.39 | 44.89 | 47.62 | 20.33 | 27.89 | 49.04 | 36.26 | 21.04 |
| 27 | Qwen2.5-32B-Ins | Text-embedding-3-large | - | 34.25 | 31.37 | 45.46 | 46.44 | 21.13 | 24.69 | 48.05 | 34.45 | 22.38 |
| 28 | Search-O1 (Qwen3-32b) | BM25 | - | 33.84 | 56.84 | 39.48 | 43.01 | 22 | 34.42 | 41.62 | 26.2 | 7.16 |
| 29 | HuatuoGPT-o1-70B | BM25 | - | 33.43 | 49.77 | 40.02 | 38.47 | 21.91 | 39.54 | 39.3 | 28.38 | 10.01 |
| 30 | DeepSeek-R1-Distill-Llama-70B | BM25 | - | 33.29 | 49.52 | 38.86 | 39.48 | 22.12 | 38.4 | 34.95 | 33.86 | 9.13 |
| 31 | Search-R1 (Qwen2.5-7b-it-em-ppo) | Text-embedding-3-large | - | 32.89 | 28.3 | 43.6 | 48.03 | 15.2 | 18.57 | 48.38 | 38.15 | 22.92 |
| 32 | Qwen2.5-7B-Ins | NV-Embed-v2 | - | 32.69 | 30.12 | 49.95 | 49.39 | 13.37 | 19.49 | 42.99 | 38.36 | 17.86 |
| 33 | Llama3.1-70B-Ins | BM25 | - | 32.40 | 52.54 | 39.42 | 41.05 | 16.99 | 33.87 | 37.32 | 28.67 | 9.32 |
| 34 | Search-R1 (Qwen2.5-3b-it-em-ppo) | NV-Embed-v2 | - | 31.74 | 25.76 | 47.53 | 47.57 | 11.98 | 18.88 | 45.66 | 38.57 | 17.95 |
| 35 | Qwen2.5-7B-Ins | Text-embedding-3-large | - | 31.53 | 30.15 | 42.33 | 45.79 | 15.45 | 19.73 | 48.64 | 30.39 | 19.79 |
| 36 | - | NV-Embed-v2 | - | 31.43 | 27.15 | 50.10 | 47.81 | 10.90 | 16.72 | 44.05 | 39.91 | 14.81 |
| 37 | o3-mini | BGE-Large-en-v1.5 | - | 31.29 | 22.18 | 37.62 | 43.97 | 25.39 | 34.91 | 44.55 | 24.65 | 17.04 |
| 38 | - | GritLM-7B | - | 31.12 | 24.99 | 43.98 | 45.94 | 12.32 | 19.86 | 39.88 | 37.08 | 24.94 |
| 39 | Qwen2.5-32B-Ins | BM25 | - | 31.12 | 52.88 | 39.2 | 42.42 | 16.8 | 25.87 | 33.27 | 26.72 | 11.81 |
| 40 | - | SFR-Embedding-Mistral | - | 30.65 | 19.56 | 45.91 | 46.01 | 11.98 | 17.49 | 44.19 | 36.36 | 23.71 |
| 41 | Search-R1 (Qwen2.5-3b-it-em-ppo) | Text-embedding-3-large | - | 30.19 | 24.48 | 40.91 | 47.65 | 11.62 | 16.78 | 47.79 | 32.27 | 20 |
| 42 | - | BMRetriever-7B | - | 30.18 | 23.62 | 44.01 | 44.91 | 11.55 | 16.95 | 46.88 | 29.14 | 24.36 |
| 43 | DeepSeek-R1-Distill-Qwen-32B | BM25 | - | 29.05 | 48.9 | 38.8 | 38.28 | 16.04 | 25.21 | 31.55 | 22.47 | 11.15 |
| 44 | HuatuoGPT-o1-70B | BGE-Large-en-v1.5 | - | 28.98 | 18.88 | 35.5 | 39.72 | 22 | 27.48 | 43.29 | 26.46 | 18.5 |
| 45 | Search-O1 (QwQ-32b) | BGE-Large-en-v1.5 | - | 28.66 | 21.92 | 32.34 | 42.71 | 20.1 | 24.34 | 42.91 | 26.31 | 18.66 |
| 46 | GPT4o | BGE-Large-en-v1.5 | - | 28.63 | 22.59 | 32.97 | 41.29 | 19.45 | 27.18 | 45.43 | 23.28 | 16.85 |
| 47 | - | Text-embedding-3-large | - | 28.57 | 23.82 | 40.51 | 44.05 | 11.78 | 15.01 | 47.43 | 28.87 | 17.12 |
| 48 | DeepSeek-R1-Distill-Llama-70B | BGE-Large-en-v1.5 | - | 28.41 | 21.68 | 34.79 | 40.33 | 19.06 | 23.93 | 42.74 | 26.44 | 18.28 |
| 49 | Search-O1 (Qwen3-32b) | BGE-Large-en-v1.5 | - | 28.28 | 23.88 | 30.34 | 42.16 | 18.48 | 25.49 | 42.96 | 25.46 | 17.47 |
| 50 | Llama3.1-70B-Ins | BGE-Large-en-v1.5 | - | 28.18 | 21.4 | 37.06 | 41.44 | 15.91 | 22.85 | 45.78 | 24.61 | 16.36 |
| 51 | - | Voyage-3 | - | 27.34 | 25.42 | 38.98 | 41.63 | 8.74 | 9.36 | 45.28 | 28.68 | 20.64 |
| 52 | QwQ-32B | BGE-Large-en-v1.5 | - | 26.59 | 21.01 | 32.89 | 39.45 | 16.68 | 22.55 | 43.4 | 20.75 | 15.95 |
| 53 | DeepSeek-R1-Distill-Qwen-32B | BGE-Large-en-v1.5 | - | 26.40 | 19.61 | 33.42 | 40.76 | 14.78 | 19.3 | 42.38 | 23.84 | 17.1 |
| 54 | Qwen2.5-7B-Ins | BM25 | - | 26.38 | 48.49 | 29.38 | 41.75 | 12.17 | 19.48 | 26.63 | 24.48 | 8.67 |
| 55 | Qwen2.5-32B-Ins | BGE-Large-en-v1.5 | - | 26.19 | 23.6 | 33.76 | 41.51 | 14.41 | 17.98 | 39.77 | 22.23 | 16.22 |
| 56 | - | E5-mistral-7b-instruct | - | 24.92 | 18.81 | 42.86 | 41.77 | 6.70 | 11.54 | 23.58 | 31.17 | 22.93 |
| 57 | Search-R1 (Qwen2.5-7b-it-em-ppo) | BGE-Large-en-v1.5 | - | 24.78 | 19.14 | 30.38 | 37.31 | 12.53 | 14.81 | 38.93 | 24.51 | 20.6 |
| 58 | - | BMRetriever-2B | - | 24.69 | 19.50 | 33.30 | 39.45 | 9.97 | 9.31 | 38.01 | 25.65 | 22.30 |
| 59 | Search-R1 (Qwen2.5-7b-it-em-ppo) | BM25 | - | 24.56 | 36.51 | 26.92 | 34.62 | 10.02 | 13.88 | 34.98 | 28.18 | 11.37 |
| 60 | Qwen2.5-7B-Ins | BGE-Large-en-v1.5 | - | 24.00 | 19.89 | 30.18 | 40.7 | 12.98 | 15.05 | 41.02 | 17.09 | 15.07 |
| 61 | Search-R1 (Qwen2.5-3b-it-em-ppo) | BGE-Large-en-v1.5 | - | 22.14 | 16.8 | 26.83 | 36.74 | 8.4 | 12.17 | 37.15 | 22.84 | 16.16 |
| 62 | Search-R1 (Qwen2.5-3b-it-em-ppo) | BM25 | - | 20.03 | 34.38 | 20.31 | 30.04 | 5.47 | 13.18 | 31.66 | 19.69 | 5.53 |
| 63 | - | InstructOR-XL | - | 18.13 | 21.56 | 32.91 | 36.79 | 4.63 | 4.29 | 14.18 | 14.49 | 16.17 |
| 64 | - | BMRetriever-410M | - | 18.10 | 12.37 | 29.92 | 31.26 | 4.46 | 6.28 | 25.31 | 17.46 | 17.73 |
| 65 | - | BGE-Large-en-v1.5 | - | 17.02 | 12.71 | 27.04 | 27.76 | 4.10 | 8.33 | 26.45 | 15.06 | 14.72 |
| 66 | - | InstructOR-L | - | 16.21 | 15.82 | 29.71 | 36.88 | 3.84 | 4.81 | 15.84 | 9.02 | 13.77 |
| 67 | - | BM25 | - | 15.13 | 19.19 | 21.55 | 19.68 | 0.66 | 2.55 | 23.69 | 21.66 | 12.02 |
| 68 | - | Contriever | - | 11.76 | 9.15 | 18.02 | 25.22 | 1.71 | 2.52 | 11.47 | 13.40 | 12.57 |
| 69 | - | MedCPT | - | 9.02 | 2.15 | 17.57 | 14.74 | 1.68 | 2.02 | 11.33 | 14.62 | 8.03 |
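
The Avg. column appears to be the unweighted mean of the eight per-dataset nDCG@10 scores. The sketch below checks this for three rows copied from the leaderboard; the `rows` dictionary and the `macro_average` helper are illustrative names, not part of the R2MED codebase.

```python
from statistics import mean

# (reported Avg., eight per-dataset scores) copied from three leaderboard rows.
rows = {
    "BGE-Reasoner-Embed-0928": (43.18, [54.01, 66.33, 67.64, 20.93, 27.96, 51.38, 33.76, 23.43]),
    "NV-Embed-v2": (31.43, [27.15, 50.10, 47.81, 10.90, 16.72, 44.05, 39.91, 14.81]),
    "MedCPT": (9.02, [2.15, 17.57, 14.74, 1.68, 2.02, 11.33, 14.62, 8.03]),
}

def macro_average(scores):
    """Unweighted mean over datasets, rounded to two decimals like the table."""
    return round(mean(scores), 2)

for name, (reported, scores) in rows.items():
    print(f"{name}: reported {reported:.2f}, recomputed {macro_average(scores):.2f}")
```

Because the mean is unweighted, each dataset contributes equally to the ranking regardless of how many queries it contains.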

@article{li2025r2med,
  title={R2MED: A Benchmark for Reasoning-Driven Medical Retrieval},
  author={Li, Lei and Zhou, Xiao and Liu, Zheng},
  journal={arXiv preprint arXiv:2505.14558},
  year={2025}
}